As you will have gathered from the web address or the title of this website, I own a C14 EdgeHD, purchased in 2010. It is one of the first ones made by Celestron, from a time when the tubes were apparently still shipped from China to California so that the optical chain could be checked and measured, a step that was dropped afterward.

I bought it from a company here in France called “Optique et Vision” http://www.ovision.com/, based in Juan-les-Pins and well known for providing quality instruments.

Being primarily interested in astrophotography, I chose this scope because it is quite versatile. It offers a long focal length (3910mm) for small objects such as galaxies or planets, and it can also be used in Hyperstar mode with a 715mm focal length for wide-field objects. In Hyperstar mode, it really is quite a “light bucket” with its 356mm primary mirror at F/D 2. This C14 offered the best of both worlds.
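For anyone who wants to check these figures, the focal ratio is simply the focal length divided by the aperture. Here is a minimal Python sketch using only the numbers quoted above; the function and variable names are my own, and the "speed gain" is the usual rule of thumb that exposure time for extended objects scales with the square of the focal ratio, not a measured result. It gives roughly F/D 11 at the native focal length and F/D 2 in Hyperstar mode.

```python
def focal_ratio(focal_length_mm: float, aperture_mm: float) -> float:
    """Focal ratio (F/D): focal length divided by aperture diameter."""
    return focal_length_mm / aperture_mm


# Figures quoted in the text above.
APERTURE_MM = 356.0
NATIVE_FOCAL_MM = 3910.0
HYPERSTAR_FOCAL_MM = 715.0

native = focal_ratio(NATIVE_FOCAL_MM, APERTURE_MM)
hyperstar = focal_ratio(HYPERSTAR_FOCAL_MM, APERTURE_MM)

# Rule of thumb (an assumption, not a measurement): for extended objects,
# the exposure needed scales with the square of the focal ratio.
speed_gain = (native / hyperstar) ** 2

print(f"Native focus:   F/D {native:.1f}")
print(f"Hyperstar mode: F/D {hyperstar:.1f}")
print(f"Approximate speed gain in Hyperstar mode: {speed_gain:.0f}x")
```

This is the arithmetic behind the "light bucket" remark: same mirror, much shorter focal length, so far more light per unit area on the sensor.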

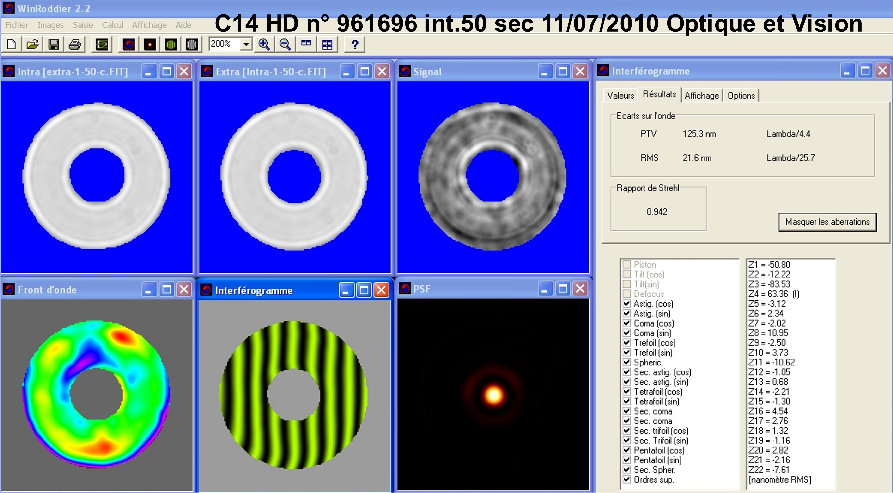

It came with a Roddier control report, performed on a star by the seller prior to shipping the OTA to me.

In addition, I drove the telescope down to a now-defunct company specializing in interferometric optical measurement. The report is available here:

and showed the telescope to be accurate to λ/4.

As for a mount, I chose a Losmandy Gemini Titan 1:50 with a load capacity of up to 45 kg, which comfortably accommodates the C14 and more. Of course, this mount has its quirks and perks (especially the Gemini), but mechanically, it is superb. In my opinion, the Titan is to other mounts what a Chevy V8 is to a 1.2-liter engine! Good old and brutal American engineering. This mount is also fitted with a precision worm screw manufactured by Optique & Vision.

I also purchased an Atik 11000 One Shot Color camera (having previously owned a modified Canon 350D on a C11 telescope). I know it is supposed to be slightly less sensitive than a monochrome camera, but I never really wanted to bother with RGB filters. It can cool the sensor by up to 37 °C below ambient temperature.

Imaging at a 3910mm focal length can be quite challenging. To have proper guidance, there is no other choice but to use an Off Axis Guider (OAG) with a guiding camera, in my case an Atik 16IC. The OAG is also from Atik and, together with the added Crayford focuser, puts the main sensor at just over the right back-focus distance (ideally 146mm).

All in all, I am quite satisfied with my equipment. Even though the aperture of the C14 is way too large for the atmospheric turbulence at my location when used at its native focal length, it is fantastic in Hyperstar wide-field operation.